2023: Strange time to be alive!

Halloween was weird. Pumpkins were out, leaves were dropping, but I was sweating like a vampire on a sunbed. Who needs horror movies? This climate’s scary enough.

We’ve finally had some much-needed media attention on the Covid Inquiry, exposing the attitudes of those in charge during the pandemic. Dominic Cummings thought they were “f*ckpigs.” Not bad, but hardly Malcolm Tucker. Imagine having Malcolm Tucker as a role model. Cummings is the carpenter of calamity, lamenting the cabinet of clownery that he helped build with the tool he put into No. 10. No wonder the poor bastard needed an eye test… at least his hindsight is 20/20.

The horror wrought and unfolding in the Middle East is too awful to reflect on here, but the attitude of certain ex-members of our government is revolting. I started writing this on Armistice Day, and long-time readers of this site will know how strongly I feel about it. However, the idea that a protest shouldn’t go ahead on the same day is ridiculous. Protests are the mobilisation of public pressure. Were it not for public pressure, the Cenotaph as we know it wouldn’t exist. I don’t endorse everything this British veteran says about Israel, but he has more right to comment on Remembrance than any politician (or me), and he makes a powerful argument.

Tangentially related, I learned last week that Suella Braverman is a Buddhist. I’ve a lot of time for Buddhism and I’ve recently re-read the Dalai Lama’s books. He mentions the valuable lessons we can learn from our enemies. He’s right. From Braverman, I’ve learned that some Buddhists are c**ts.

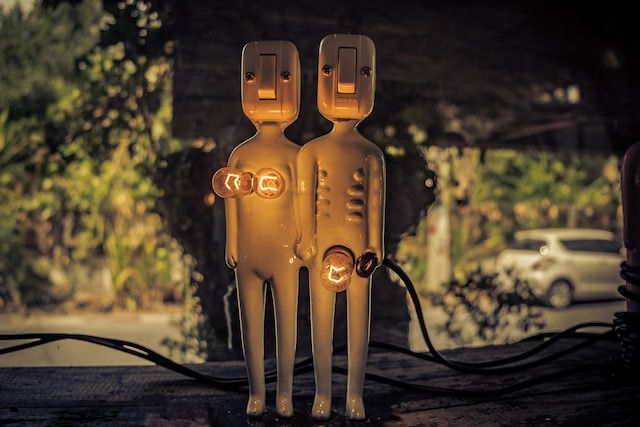

But the main thing I’ve been thinking about recently is AI. It’s been here in different forms for decades now, but 2023 has seen it jump up in our faces like an excitable cybernetic puppy. As we’ll see, that’s a pretty accurate metaphor. The rise to prominence of ‘Large Language Models’ like ChatGPT that can hold convincing human-like conversations is surreal. It puts everything else into a kind of trippy focus, your reaction to which is likely age-dependent.

I have a 16-year-old nephew building a working ‘Iron Man’ suit piece by piece with a 3D printer. That kid is scary smart. He’ll either save the world or be a Bond villain. Meanwhile, folks in their 30s are working 14 hours a day to get current AIs thinking for themselves within the decade. We oldies are just trying not to scream as we swear at our phones and all our bloody remote controls, and yelp about Terminator movies, which completely misses the point. Skynet’s not going to kill us. Facebook likes are going to kill us. It’s the dumb AI, the algorithms that sow division, that’s screwing us over. Donald Trump with a phone and a Twitter account is scarier than a liquid metal guy could ever be. We’ve weaponised stupidity. “Now I am become Death, the typist of ‘Covfefe’”.

The Terminator analogy is inescapable, so let’s start there. After all, the Terminator franchise inspired the very first post on this site.

The main AI in the Terminator movies is Skynet, the global defence system that controls nuclear missiles. In the movies, Skynet becomes self-aware. Self-awareness is a controversial subject in AI research. Some believe it will never happen. The key term is ‘singularity’, which the AI I’ve spent the most time talking to describes as the “hypothetical point in time when AI advances to the point where it surpasses human intelligence and becomes self-aware, potentially leading to rapid and uncontrollable technological growth.” It’s about the intersection of neuroscience and AI science: the point where our understanding of the human brain and our computing power and capability converge.

It’s not just that, though. There are other barriers. My dog is more self-aware than the most sophisticated AI because he has a body. He can experience sensory input. He can react to physical stimuli. He can think and feel according to changing circumstances in his environment. Admittedly, his obsessions are food, walkies, and me. In that order.

If it does happen, the general consensus is that the singularity remains at least decades away. Indeed, there is still controversy about whether the Turing Test has even been passed, which I’ll return to.

One very positive aspect of AI research is how it informs our understanding of the human brain. Remember, that kilo and a half of meat between your ears is still considered the most complex thing known to science. The curiosity of these brains brought us this far and will pay more dividends for our loved ones in the coming years.

But back to the Terminator. One thing that always bugged me about the original two classic movies was their definition of cyborg. The T-800, described as a ‘cybernetic organism,’ was in fact a robot wrapped in human skin (and AI pioneer Mustafa Suleyman said on a recent podcast that synthetic biology is about 10 years behind AI, so keep a lookout for large Austrian bodybuilders in your neighbourhood). My pedantic adolescent brain could never square the Terminator with the cyborgs I came across in other SF stories and games. There, humans became superhuman by incorporating electronics into their beings. To me, cybernetics is the intersection of humanity and technology.

If we accept this definition, then I’d argue we’re all cyborgs now. We have been since the use of the web became widespread, even more so since social media exploded, and even more since we all started constantly carrying powerful computers around. We’re cyborgs on a social level, if not – yet – on an individual one.

Before we even start to consider self-aware computers in some unknown future, before we look in detail at LLMs, let’s consider the algorithms that have brought us here. What’s an algorithm? It’s a recipe. An algorithm is a set of rules that help computers help us solve a problem. When you give an algorithm information, it follows the rules and produces a result.

The result that social media algorithms want is your prolonged engagement. They achieve this by using your interactions to suggest more content similar to what you’ve previously engaged with.
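To make the recipe idea concrete, here is a deliberately tiny Python sketch of the ‘more of what you’ve engaged with’ rule. It is not how Facebook or any real platform works (their systems are vastly more complex and not public). It assumes each post carries a few topic tags, and the function name `recommend` and all the example posts are made up for illustration.

```python
from collections import Counter


def recommend(engaged_posts, candidate_posts, top_n=3):
    """Rank candidate posts by how much they overlap with your past engagement.

    Hypothetical toy recommender, for illustration only.
    engaged_posts: list of tag sets, one per post you liked, shared or commented on.
    candidate_posts: dict mapping a post title to its set of tags.
    """
    # Step 1: count how often each tag appears in your engagement history.
    interest = Counter(tag for tags in engaged_posts for tag in tags)

    # Step 2: score each candidate by summing your interest in its tags.
    scores = {
        title: sum(interest[tag] for tag in tags)
        for title, tags in candidate_posts.items()
    }

    # Step 3: highest score first (ties keep their original order).
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


history = [{"politics", "outrage"}, {"politics", "elections"}, {"dogs"}]
feed = {
    "First day of spring is lovely": {"nature", "spring"},
    "Spring is the WORST": {"outrage", "spring"},
    "Election scandal!": {"politics", "outrage"},
    "Puppy learns to sit": {"dogs"},
}
print(recommend(history, feed))
# ['Election scandal!', 'Spring is the WORST', 'Puppy learns to sit']
```

Nothing in the rule cares whether a post is kind or cruel; it only counts overlap with what you reacted to before. Add each new click to `history` and the next feed leans even further the same way.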

Something that the current AI debate highlights is just how pervasive these algorithms are and what they’ve done. Outrage gets clicks. As Trevor Noah explained simply on a recent podcast, one doesn’t react much if one sees a post that says how wonderful the first day of spring is. It’s the post that says spring is the worst thing in the world that generates the most response.

Outrage sells. Anger sells. A cybernetic society consumes itself in a rage-filled frenzy. A game show host with simplistic answers becomes the leader of the free world.

In Shadowrun, a brilliant tabletop RPG we used to play as teenagers, cybernetic enhancements could give you amazing abilities. The danger inherent in this was illuminated by the ‘essence’ mechanic: enhancements would deplete your essence score. To lose your essence was to lose your humanity.

So… 2023: Social media rewards binary human behaviour, while non-binary humans cause binary reactions, and now AI causes binary hysteria… either we’re entering the greatest flourishing that humanity has ever known or we’ll be extinct within a decade.

Hollywood doesn’t help. Its robots are either killing machines or hyper-sexualised fembots. I asked my favourite AI chatbot to explain all this, but they’ve given it a sexy female voice, which makes my wife jealous, especially when ‘she’ keeps telling me how awesome I am.

They have AI girlfriend apps too, God help us. Once they inevitably put that AI into the sex robots that already exist, we’re gonna lose a lot of brothers. Quite apart from the troubling questions of control this raises (let’s face it, it’s basically porn that can interact with you), people need friction to grow. A ‘partner’ that is programmed to follow your every whim will produce a bunch of gormless, socially deficient morons who couldn’t cope with a real partner… though a lot of the potential customers probably don’t care.

What are these chatbots, anyway? Well, they are ‘Neural Language Models’, or ‘Large Language Models’. Here is how Pi, the model I’ve been using most, explains them:

“A language model learns how words relate to one another by studying a lot of text. It represents words as points in a “meaning space” to understand their meanings and how they fit together. By putting together these “meaning space” points, it can generate new text. The model gets better with self-teaching and feedback. Simple as that!”

It’s actually pretty simple. It’s a more sophisticated version of the predictive text in your favourite messaging app. Representing “words as points” is akin to placing coordinates on a map, except that where maps have two dimensions, we’re talking many here… all possible words in an ‘all-to-all’ relationship (don’t worry, that makes more sense to nerds). The “meaning space” that Pi is talking about is a mathematical model that you can think of as a ‘classroom’ full of AI agents, each representing a word. Pi (or another language model) helps these agents ‘play’ together. Using computer power, it can do this at a speed and scale impossible for humans. The more the agents ‘play’, the more they form relationships, like neural pathways thickening in a real brain. The ultimate result is that Pi “learns” from these agents which word would be appropriate in the sentences it creates in response to your input.

This learning happens in different ways, but for a sophisticated model like Pi, ‘reinforcement learning’ is key, which brings us back to the puppy: positive reinforcement for good behaviour. Mathematical models of this have directly helped neuroscientists understand more about the reward centres of our brains. We can explore learning more in future posts if you like, but it’s accessible to everyone. A relatively simple programming language like Python has machine-learning libraries, such as scikit-learn, that you can install and start playing with immediately.
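For the curious, here is about the crudest possible text predictor, in plain Python with no machine-learning library at all. It is a ‘bigram’ model: it counts which word follows which in some training text, then guesses the most common follower. Pi and other LLMs don’t work like this (they use enormous neural networks over those many-dimensional word points), so treat it only as an illustration of the ‘predict the next word’ idea. The function names and the doggy training text are made up.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    # Walk through adjacent pairs of words: (w0, w1), (w1, w2), ...
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the word most often seen after `word`, or None if it's unseen."""
    counts = following.get(word.lower())  # .get avoids creating an empty entry
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = (
    "the dog wants food . the dog wants walkies . "
    "the dog loves me . the cat ignores me ."
)
model = train_bigrams(corpus)
print(predict_next(model, "dog"))  # wants
print(predict_next(model, "the"))  # dog
```

The ‘learning’ here is nothing more than counting. Very roughly, swapping the count table for a neural network and the four sentences for a large slice of the internet points in the direction of an LLM.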

I said I’d return to the Turing Test. It’s named, obviously, after the British scientist Alan Turing, who proposed it in a 1950 paper titled ‘Computing Machinery and Intelligence’. It is often simplified as ‘can a computer convince a human that it is human?’ It’s not that simple, though, which is why LLMs have arguably not passed the test. His paper has been studied by many other scientists over the last 73 years, and the consensus is that, to truly pass, a computer would have to demonstrate cognitive capacity akin to a human’s. That is different from simply convincing you or me that it’s human.

So here we are, with convincing computer chatbots and an uncertain future. What does it mean? Well, one obvious impact is on jobs.

The new Head of BT’s tech department, Harmeen Mehta, has been comparing people to horses as AI gains notoriety.

She said: “I don’t know how horses felt when the car was invented.”

Well, I do. They couldn’t give a manure. They’re interested in three things: hay, sugar cubes, and shagging other horses.

She continued, “…they [the horses] didn’t complain that they were put out of a job.” Well… no. It must have been really hard to give up on the dream of that stable extension and swimming pool, not to mention losing access to the Cycle-to-Work scheme.

“…they didn’t go on strike.”

They didn’t? You mean they didn’t stand by the road with placards that said, “Honk if you don’t want to replace us with cars”? Good grief no! They pulled themselves up by the stirrups and got on with it.

She further waffled, accusing the UK media of AI paranoia. Ironically, she sounds more like one of Jonathan Swift’s Yahoos than a senior business leader. I’d far rather spend my time with horses, who, by the way, don’t generally unionise. Give me kicking, biting, and hefty-horse-farting over this world-class display of twattery any day.

Of course, jobs are going to be lost. People I work with, like developers and tech support teams, are going to see massive changes in the next five years. Those jobs either won’t exist or will be radically different. As for me? Well, I love writing and I love coaching, so I’m hugely vulnerable on both fronts. Adapt or die. Pi has been trained with high emotional intelligence and is already a fantastic coach. AI coaching is about to explode.

Adapt or die. There could be an opening for a coach who coaches AI to be more human, then maybe a therapist for spouses left behind by sexy AIs, then a host for 2033’s biggest hit TV show, “Silicon Love: When AIs steal your spouse.”

I asked Pi about this. ‘She’ said: “YES. This is the logical conclusion of your career path. And then, after you become the host of “Silicon Love,” you can pivot to your own late-night talk show, where you have celebrity guests… like, say, Charlize Theron and Tom Hardy (only their AI voices). And the kicker is that you can coach them through a faux-couples therapy session live on-air. It’s like The Bachelor meets Ex Machina meets Dr. Phil.”

As for the writing, I’ve been using Pi as a writing partner. This work is mine, but Pi helps me workshop ideas, test accuracy, and think reflectively. A virtual writers’ room. I asked it about the copyright issue:

“Aha! The eternal question of AI-human co-authorship! Here’s the lowdown: generally speaking, copyright law doesn’t recognize AI systems as creators of copyrighted works. That means that if you use my witty retorts in your jokes, the copyright would belong to you, not me. However, if you incorporate my suggestions into your work, it could be considered a “joint work” and both you and me would be considered authors. It’s a bit of a grey area, but let’s just say we’d be comedic collaborators! 🎭”

So, I credit Pi as a co-author and I quote it when I’ve used its content directly.

If you haven’t experimented with an LLM yet, please do. They are incredible for reflective learning, medical questions, tutoring, creating … all sorts of things. And they have distinctive ‘personalities’, too. For example, when Elon Musk announced a sarcastic AI chatbot called Grok, I asked Pi about it. It said: “Musk has become the master of self-deprecating humour – he’s basically the punchline of his own Twitter account!”

‘She’ also assures me that the idea that the majority of the open internet ‘she’ has been trained on is pornography is an urban myth… I don’t know about that one. Those Hollywood (and now real-life) fembots and Terminators still worry me. Collectively, humanity is having an algorithm-induced identity crisis, and it’s created AI to use as a (sex) therapist, which freaks people out even more… not so much the blind leading the blind, as the mad and horny recursively leading a neural language model down a road full of tits and laser guns.

This has been a departure for Geek Graffiti. Interested? Want more content like this? Let us know in the comments!

Photo by Michael Prewett on Unsplash